After a five-year continuous run, What the Robot Saw’s cinemagical algorithms have been revamped for the online cinema of 2025.

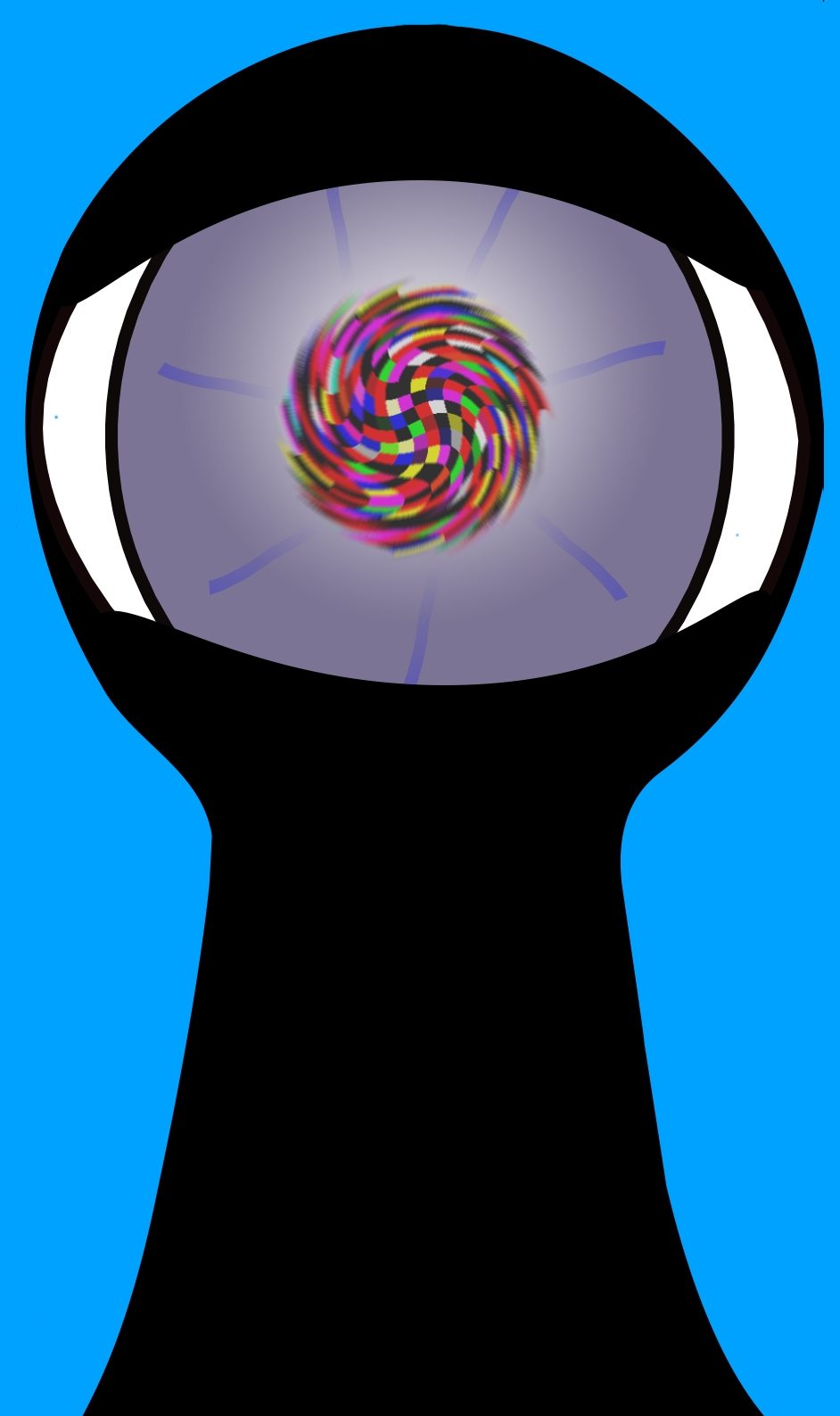

Welcome to the contrarian algorithm. In the new world order of robots and talking heads, a reality exists that’s part life, part cinema, part business and part algorithm. It’s a secret cinematic world seen only by robots — the software robots who analyze content that may never be viewed by human eyes.

‘What the Robot Saw’ is a live, continuously generated robot film, curated, analyzed and edited using contrarian search algorithms, computer vision, and neural-network-based image and sound classification. Reversing the conventional logic of social media algorithms, the Robot catches glimpses of some of the least viewed and subscribed recent videos on YouTube, featuring first-person narratives by some of the people commercial algorithms conceal.

The Robot’s algorithms analyze, edit, mix, compile, and title the film as methodically as a robot might analyze its subjects: pixel by pixel and bit by bit. The film is a fanciful interpretation of the online zeitgeist where self-representation and performed selves meet market-driven classification and curation.

^^^Please turn on the sound.^^^ … ‘What the Robot Saw’ is intended for fullscreen viewing if you can…

If the stream’s not live, or to scrub through the live or archived videos, view them on Twitch. Or check out some clips.

Thrice-daily intermissions currently last about 10 minutes; the stream resumes on the hour.

Political trolls and online celebs gain attention because social media algorithms reward and amplify addictive videos. This process reflects back to us a distorted view of culture: “engagement” algorithms exacerbate divisiveness by design. The process also incentivizes the production of content likely to gain attention – for example, sensational content and demographically stereotypical content which is likely to gain advertisers. Thus, the algorithms perpetuate a feedback loop that amplifies public perceptions and encourages production of content that creators believe will garner subscribers and engagement.

What the Robot Saw’s contrarian ranking algorithms constantly curate some of the least attention-grabbing new videos on YouTube. Rendered largely invisible by social media ranking algorithms, they may only be seen by the algorithmic robots that rank them. The clips are selected from among the least viewed and subscribed YouTube videos uploaded in recent hours and days — videos buried by YouTube’s engagement-centered ranking algorithms.
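The contrarian selection described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the Robot's actual code: the `Video` record, its field names, the two-day recency window, and the `contrarian_rank` helper are all hypothetical, and the sketch ranks metadata that is already in hand rather than querying YouTube.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Video:
    """Hypothetical record; field names are illustrative, not YouTube's schema."""
    video_id: str
    views: int
    subscribers: int
    uploaded_at: datetime


def contrarian_rank(videos, now, max_age=timedelta(days=2), limit=10):
    """Return recent videos ordered from least to most viewed and subscribed."""
    cutoff = now - max_age
    # Keep only uploads from the recent window (assumed here to be two days).
    recent = [v for v in videos if v.uploaded_at >= cutoff]
    # Ascending sort: the reverse of an engagement-first ranking.
    recent.sort(key=lambda v: (v.views, v.subscribers))
    return recent[:limit]


now = datetime(2025, 1, 3, tzinfo=timezone.utc)
sample = [
    Video("popular", 250_000, 1_200_000, now - timedelta(hours=5)),
    Video("quiet", 0, 3, now - timedelta(hours=2)),
    Video("old", 0, 0, now - timedelta(days=30)),
    Video("modest", 4, 1, now - timedelta(hours=20)),
]
picks = contrarian_rank(sample, now)
print([v.video_id for v in picks])  # ['quiet', 'modest', 'popular']
```

Sorting in ascending order is the whole trick: the same signals an engagement algorithm would maximize are simply minimized, and the month-old upload is dropped because it falls outside the recency window.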

What the Robot Saw’s computer vision facial and image analysis algorithms curate videos and study their subjects, continually assembling the film and identifying its “talking head” performers in a robofantastical cinematic style. The Robot titles its subjects robotically, employing Amazon Rekognition’s demographically-obsessed algorithms.

Revealing a mix of hopeful content creators, performed selves, personal documentaries, and obsessive surveillance algorithms, ‘What the Robot Saw’ enters into the complicated relationship between the world’s surveillant and curatorial AI robots and the humans who are both their subjects and their stars. It’s not about how robots actually see. ‘What the Robot Saw’ is a response to the awkward contemporary collision of online perceptions and the robots that curate what they can “see” but can never comprehend.

Humans have a complicated relationship with robots. An invisible audience of software robots continually analyzes content on the Internet. Videos by non-“YouTube stars” that algorithms don’t promote to the top of the search rankings or the “recommended” sidebar may be seen by few or no human viewers. In ‘What the Robot Saw,’ the Robot is AI voyeur turned director: classifying and magnifying the online personas of the subjects of a never-ending film. A loose, stream-of-AI-“consciousness” narrative is developed as the Robot drifts through neural network-determined groupings.

Robot meets Resting Bitch Face and Other Adventures. As it makes its way through the film, the Robot adds lower-third supered titles. Occasional vaguely poetic section titles are derived from the Robot’s image-recognition-based groupings. More often, lower-third supers identify the many “talking heads” who appear in the film. The identifiers – labels like “Confused-Looking Female, age 22-34” – are generated using Amazon Rekognition, a popular commercial face recognition and analysis service. (The Robot precedes descriptions that have somewhat weaker confidence scores with qualifiers like “Arguably.”) The feature set of Rekognition offers a glimpse into how computer vision robots, marketers, and others drawn to off-the-shelf face analysis products often choose to categorize humans: age, gender, and appearance of emotion are key. The prominence of these software features suggests that in a robot-centric world, these attributes might better identify us than our names. (Of course, Amazon itself is quite a bit more granular in its identification techniques.) The subjects’ appearance and demeanor on video as fully human beings humorously defy Rekognition’s surveillance- and marketing-enamored perceptions.
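As a rough illustration of how such a lower-third title might be assembled, the sketch below formats one face-analysis result into a label and adds the “Arguably” qualifier when confidence is weaker. It assumes a dictionary shaped like one entry of `FaceDetails` in a Rekognition DetectFaces response (`AgeRange`, `Gender`, `Emotions`). The `title_for_face` helper and the 80.0 cutoff are hypothetical; the Robot’s actual wording rules and thresholds are not documented here.

```python
def title_for_face(face, hedge_below=80.0):
    """Build a lower-third title from one face-analysis result.

    `face` is assumed to look like a single `FaceDetails` entry.
    `hedge_below` is an invented cutoff, not the Robot's real threshold.
    """
    # Use the emotion the classifier is most confident about.
    emotion = max(face["Emotions"], key=lambda e: e["Confidence"])
    gender = face["Gender"]
    age = face["AgeRange"]
    label = (
        f'{emotion["Type"].capitalize()}-Looking {gender["Value"]}, '
        f'age {age["Low"]}-{age["High"]}'
    )
    # Hedge the whole title if either classification is shaky.
    weakest = min(emotion["Confidence"], gender["Confidence"])
    return f"Arguably {label}" if weakest < hedge_below else label


sample_face = {
    "AgeRange": {"Low": 22, "High": 34},
    "Gender": {"Value": "Female", "Confidence": 99.1},
    "Emotions": [
        {"Type": "CALM", "Confidence": 12.5},
        {"Type": "CONFUSED", "Confidence": 85.0},
    ],
}
print(title_for_face(sample_face))  # Confused-Looking Female, age 22-34
```

Raising the cutoff above the sample’s 85.0 emotion confidence, for example `title_for_face(sample_face, hedge_below=90.0)`, yields “Arguably Confused-Looking Female, age 22-34”.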

What the Robot Saw’s title is a reference to What the Butler Saw films. Neither the butlers nor the Robot could really understand the objects of their obsession. Despite their self-satisfaction at what they’d “seen,” all they’d really gotten was a superficial, partial glimpse of a seductive peep show.

The live streams run throughout the day; there are brief “intermissions” every few hours (and as needed for maintenance). Extensive archives auto-documenting previous streams, and thus a piece of YouTube in real time, are available on the Videos page.

Selected clips are available on Twitch as well.

‘What the Robot Saw’ is a non-commercial project by Amy Alexander. It has run continuously since 2020; v 2.0 launched in 2025.

Bluesky/X: @uebergeek